Google BigQuery provides a fantastic interface to store and query data, but at $6.25 per TB queried the costs can add up pretty fast, whatever interface you use. There is a way around this, however: the Explore with Colab Notebook feature lets you take your query results out to a Python notebook, where you can interrogate them without paying again for every new query. In this article I will explain how to do this.

If you would rather watch a video tutorial, I explain this process on YouTube in the video below. In it we also go into how to set up pandas profiling for easy exploratory data analysis (EDA).

https://www.youtube.com/watch?v=V11ReOIMgP4

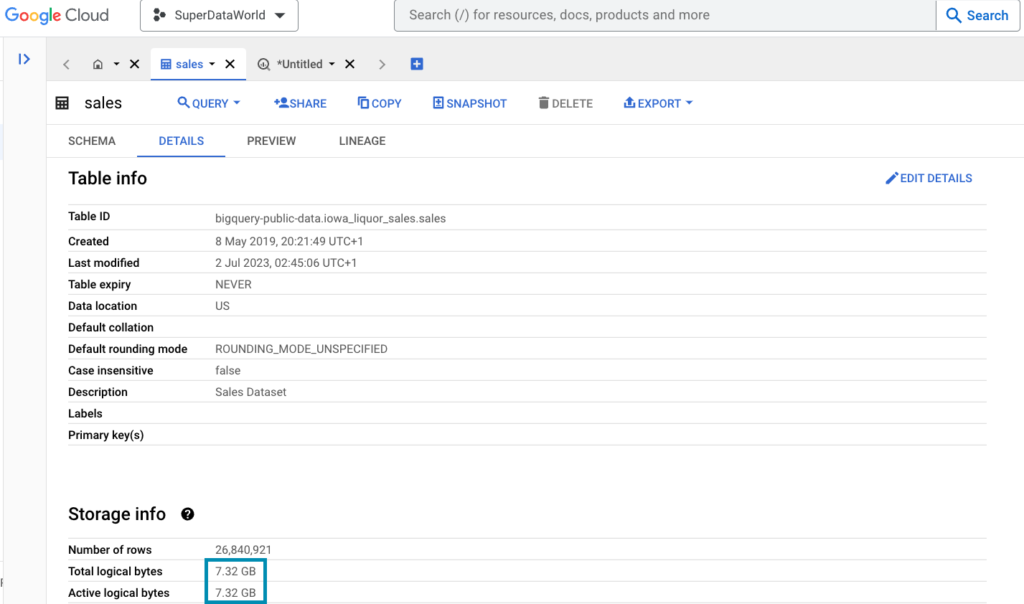

In BigQuery, the cost is calculated from the amount of data your query scans, not from the size of the result. The table in the image below is 7.32 GB, so every time I query all of its columns I am charged for 7.32 GB, regardless of how small the output is.
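To put that charge in dollars, here is a minimal sketch of the arithmetic. It assumes the $6.25 price is per tebibyte (2**40 bytes) and reads the console's "GB" as gibibytes (2**30 bytes); it ignores free-tier allowances and any minimum-billing rules, so treat the result as a rough estimate. The function name `query_cost_usd` is just for illustration.

```python
# Assumptions: $6.25 per TB with TB read as a tebibyte (2**40 bytes), and the
# console's "GB" read as a gibibyte (2**30 bytes). Free tiers are ignored.
PRICE_PER_TIB_USD = 6.25
BYTES_PER_GIB = 2**30
BYTES_PER_TIB = 2**40


def query_cost_usd(bytes_processed: int, price_per_tib: float = PRICE_PER_TIB_USD) -> float:
    """Estimate the on-demand charge for one query from the bytes it scans."""
    if bytes_processed < 0:
        raise ValueError("bytes_processed must be non-negative")
    return bytes_processed / BYTES_PER_TIB * price_per_tib


if __name__ == "__main__":
    # One scan of every column in the 7.32 GB table from the example.
    table_bytes = int(7.32 * BYTES_PER_GIB)
    print(f"One full scan: ${query_cost_usd(table_bytes):.4f}")
```

Under these assumptions a single full scan of the example table costs a few cents: small on its own, but it is charged again on every query.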

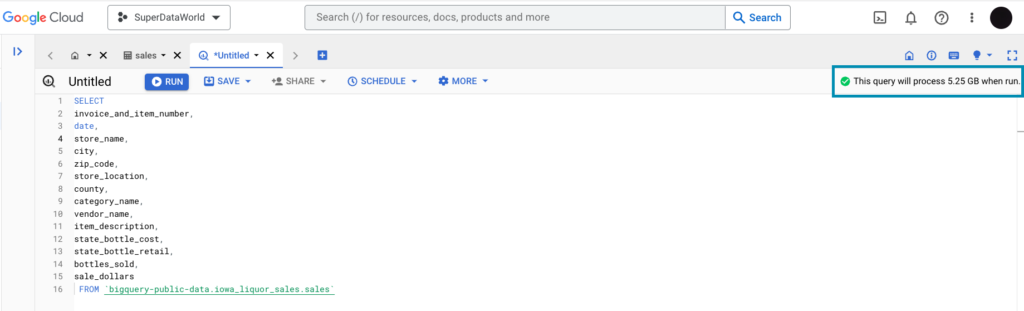

When you write a query, the console shows an estimate of how much data it will process in the top left of the screen. In the screenshot below it shows 5.25 GB, because I am selecting only a subset of the columns above.
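You can also get this estimate from Python before running anything, using a dry run. The sketch below assumes the `google-cloud-bigquery` client library and default credentials; `estimate_bytes` is a hypothetical helper name. A dry-run job reports `total_bytes_processed` without executing the query, so it is not billed.

```python
from typing import Any


def estimate_bytes(sql: str, client: Any = None) -> int:
    """Return how many bytes BigQuery expects `sql` to process, via a dry run.

    A dry run validates the query and fills in total_bytes_processed
    without executing it, so nothing is billed.
    """
    # Imported lazily so this file can be loaded without the library installed.
    from google.cloud import bigquery

    if client is None:
        client = bigquery.Client()  # default credentials and project
    # Disable the cache so the estimate reflects a fresh scan of the table.
    job_config = bigquery.QueryJobConfig(dry_run=True, use_query_cache=False)
    job = client.query(sql, job_config=job_config)
    return job.total_bytes_processed


# Usage (hypothetical project, table and column names):
# n = estimate_bytes("SELECT col_a, col_b FROM `my-project.my_dataset.my_table`")
# print(f"{n / 2**30:.2f} GiB")
```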

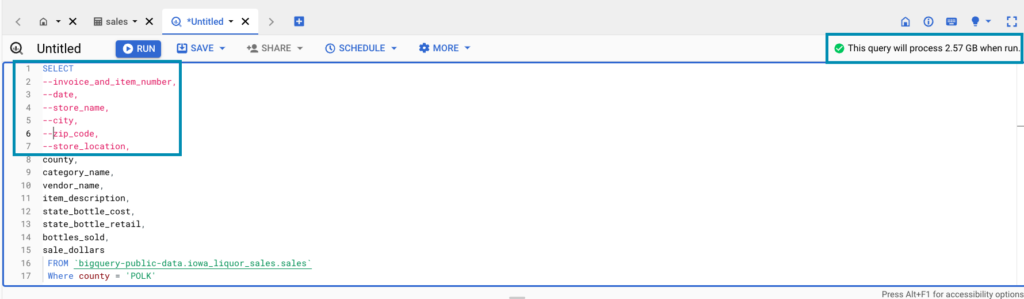

Unless the table is partitioned or clustered, the only way to reduce this cost is to select fewer columns in your SQL statement. Filtering rows does not help: every fresh query on these columns is charged again in full, so think of every new WHERE clause as a multiplier on your bill.
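A quick way to see the multiplier effect is to compare one query against many. This is a minimal sketch assuming $6.25 per TiB, the console's figures read as GiB, and a table that is not partitioned or clustered; `cost_of_repeated_queries` is a hypothetical name.

```python
BYTES_PER_GIB = 2**30
BYTES_PER_TIB = 2**40


def cost_of_repeated_queries(gib_per_query: float, n_queries: int,
                             price_per_tib: float = 6.25) -> float:
    """Estimated on-demand cost when each of n_queries scans the same columns.

    Assumes a table that is not partitioned or clustered, so adding or
    changing a WHERE clause does not reduce the bytes each query scans.
    """
    if n_queries < 0 or gib_per_query < 0:
        raise ValueError("inputs must be non-negative")
    total_bytes = n_queries * gib_per_query * BYTES_PER_GIB
    return total_bytes / BYTES_PER_TIB * price_per_tib


if __name__ == "__main__":
    # 5.25 GB per query: the column subset from the screenshot above.
    once = cost_of_repeated_queries(5.25, 1)
    for n in (1, 10, 50):
        cost = cost_of_repeated_queries(5.25, n)
        print(f"{n:>3} queries: ${cost:.2f} ({cost / once:.0f}x a single query)")
```

The cost grows linearly with the number of queries, which is exactly what exporting the results once avoids.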

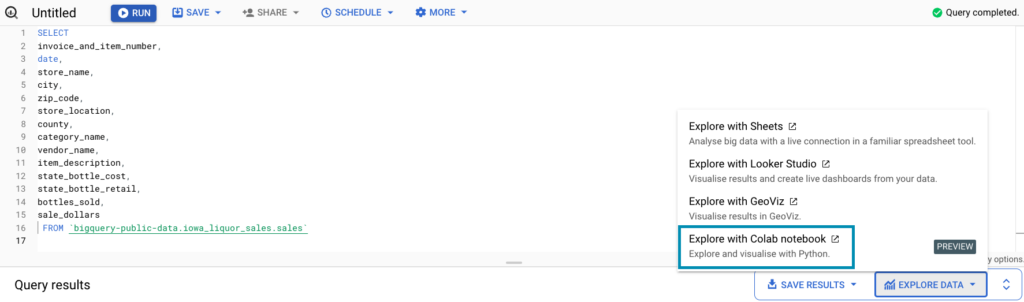

A way around this is to run one large query and take the output to Google Colab to explore the results. To do this, run your query, click Explore Data, and then choose Explore with Colab Notebook.
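The key idea is to reuse the results of the query you have already run rather than running it again. I have not reproduced the exact cells Colab generates, so the following is only a sketch under assumptions: it uses the `google-cloud-bigquery` client's `get_job` and `to_dataframe` to pull a finished job's results into pandas. `load_job_results` is a hypothetical name, and the job ID and location would come from the job information in the BigQuery console.

```python
from typing import Any, Optional


def load_job_results(job_id: str, location: Optional[str] = None, client: Any = None):
    """Download the results of an already-finished query job as a DataFrame.

    This reads the job's stored output instead of re-running the SQL,
    so the source table is not scanned again.
    """
    # Imported lazily so this file can be loaded without the library installed.
    from google.cloud import bigquery

    if client is None:
        client = bigquery.Client()  # default credentials and project
    # location must match where the job ran (e.g. "US" or "EU");
    # None falls back to the client's default location.
    job = client.get_job(job_id, location=location)
    # to_dataframe() needs pandas (and db-dtypes) installed with the client.
    return job.to_dataframe()


# Usage (hypothetical job ID, copied from the query's job information):
# results = load_job_results("your-job-id", location="US")
```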

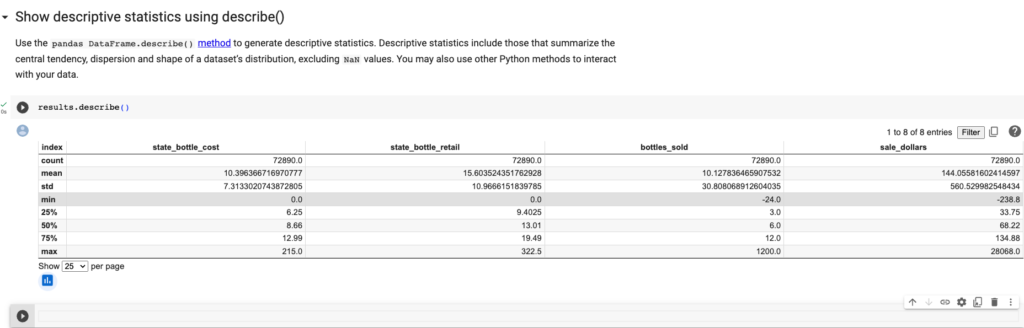

You are then taken to a Google Colab notebook, where all you need to do is run a few preset cells. After that you can explore your data freely in Python without multiplying the cost every time you want a new slice.
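Once the results are in a pandas DataFrame, each new slice is a local operation rather than a new BigQuery query. The sketch below uses a small hypothetical DataFrame with made-up `country`, `year` and `sales` columns as a stand-in for your own results; `explore_slices` is an illustrative name.

```python
import pandas as pd


def explore_slices(df: pd.DataFrame) -> dict:
    """Slices that would each be a fresh, fully billed query in BigQuery."""
    return {
        # Equivalent of a new WHERE clause
        "recent": df[df["year"] >= 2020],
        # Equivalent of GROUP BY country with COUNT(*)
        "rows_per_country": df.groupby("country").size(),
        # Summary statistics for the numeric columns
        "summary": df.describe(),
    }


if __name__ == "__main__":
    # Hypothetical stand-in for the DataFrame loaded in the Colab notebook.
    results = pd.DataFrame({
        "country": ["UK", "US", "UK", "FR"],
        "year": [2019, 2020, 2021, 2021],
        "sales": [10.0, 12.5, 7.0, 3.2],
    })
    for name, value in explore_slices(results).items():
        print(name, value, sep="\n")
```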

Happy Exploring